![]()

![]()

![]()

![]()

![]()

![]()

![]()

![]()

Code and Algorithm

The present page contains comments to version ac30 of the code for computing ARON values of the capillary routing layers. In the mean time the code progressed to version ac36 (included in the download page) which has slight changes compared with this one.

|

Script |

Comments |

|

reset; param

ver symbolic = "ac30"; param

T integer, >=1; param

N integer, >=2; param

layers{1..T} default 0; param

pump{t in 1..T, 1..layers[t]}; set

BOTTLENECKS{t in 1..T,1..layers[t]} dimen 2; set

PATH{t in 1..T,1..layers[t]} dimen 2; set

INITLINKS{1..T} dimen 2; param

load{t in 1..T, l in 1..layers[t], (i,j) in PATH[t,l]} >=0; data

dump/path.txt; |

◄The parameter T – number of timeframes; parameter N – number of nodes; parameter layers – number of capillary routing layers for each timeframe; parameter pump – the flow increase factor at every layer of each timeframe; set BOTTLENECKS – the set of bottleneck links of every layer of each timeframe; set PATH – the set of flow’s non-bottleneck path links of every layer of each timeframe; set INITLINKS – the set of ad-hoc true network links for each timeframe; and the parameter load – the relative value of load of all links of every layer for each timeframe; all these parameters and sets are read from the data file dump/path.txt, prepared by the capillary builder capillary.txt. |

|

commands

aron-config.txt; param

bottlnum{t in 1..T, l in 1..layers[t]} = card(BOTTLENECKS[t,l]); param

incrabs{t in 1..T, l in 1..layers[t]} = (if l>=2 then incrabs[t,l-1] else

1)*pump[t,l]; param

eps=1e-8; |

◄File aron-config.txt contains parameter M – the number of media packets in the block; parameter DER (stands from Decoding Error Rate) – the permitted probability of decoding failure at the receiver; chunks – the number of chunks over which all timeframes must be evenly broken (ARON performance is computed over all timeframes in a chunk). Parameter bottlnum contains number of bottlenecks in each layer of a timeframe. Parameter incrabs contains the absolute value of the flow increase factor. This parameter is defined recursively through the pump factor and the incrabs value of the previous layer. |

|

set

TC = #

{0} union #

setof{i in 5..3 by -1} round(10^-i,10) union setof{i in 0.033..0.08 by 0.003}

round(i,10) within interval [0,1); |

◄Set TC contains samples of the tolerance values. The value of the tolerance is the percentage of losses (from 0 to 1) permitted at the receiver, at which the incoming media stream is still considered as being useful. Performance of capillary routing is benchmarked under various values of the tolerance of media. |

|

set

P = TC union setof{t in 1..T, l in 1..layers[t]:

incrabs[t,l]>1} 1/incrabs[t,l] union setof{t in 1..T, l in 1..layers[t],(i,j)

in PATH[t,l] diff BOTTLENECKS[t,l]: incrabs[t,l]>1} load[t,l,i,j]/incrabs[t,l]; |

◄Every time due to a network failure there is a p percent loss at the receiver side; thus the sender must adaptively transmit some extra redundancy needed for the compensation of the random loss p at the receiver. The transmission amount as a function from p will be used through a pre-computed table. Set P contains all values of p (from 0 to 1), for which this function must be pre-computed. Loss may occur due to failure of bottleneck links and non-bottleneck path links of the capillary routing. Thus all diverse values of flow carried by the links of the path (the load values of the bottleneck links and the non-bottleneck path links) must be included into the set P. |

|

#===FEC=== param

|

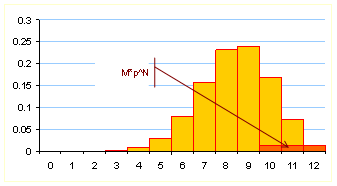

◄Starting the computation of the length of the FEC (Forward Error Correction) codeword maintaining, at a given random loss rate p, the probability of the decoding failure equal to DER. Details for computation of the FEC function are in 051026-fec-overhead. Let M be the

original number of the media packets per block and let N be the number of packets (including the redundant packets) actually

transmitted in the block (

Binomial distribution for Therefore N at

which |

|

param

maxN_probe{p in P, pr in 1..4} = param

Binomial_probe{p in P, pr in 1..4} = if M>=2 then prod{i in 1..M-1} ( (maxN_probe[p,pr]-M+1+i)/i * p^((maxN_probe[p,pr]-M+1)/(M-1))*(1-p) ) else p^maxN_probe[p,pr]; param

maxN{p in P} = maxN_probe[p, min{pr in 1..4: Binomial_probe[p,pr]<=DER/M}

pr]; |

◄For the role of the upper

bound for the length of the codeword, we can take a value N at which the value of the binomial

distribution

Binomial distribution with We examine a few probe values for N which are two, four, etc, times longer the lower bound. The

first value of N in this sequence

for which |

|

set

NN{p in P} = { |

◄Set NN contains all values of the length of the codeword (from the lower bound to the upper bound) that are to be examined |

|

param

Binomial1{p in P, Ntr in NN[p]}= if M>=2 then prod{i in

1..M-1}((Ntr-M+1+i)/i*p^((Ntr-M+1)/(M-1))*(1-p)) else p^Ntr; |

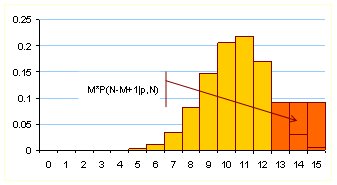

◄Parameter Binomial1 contains, for each N

between the lower bound and the upper bound, the value of the binomial

distribution at |

|

param

pbyq{p in P} = p/(1-p); param

Binomial{p in P, Ntr in NN[p], n in Ntr-M+1..Ntr} = if n=Ntr-M+1 then Binomial1[p,Ntr] else Binomial[p,Ntr,n-1]*(Ntr-n+1)/n*pbyq[p]; |

◄For each n between |

|

param

Failure{p in P, Ntr in NN[p]} = sum{i in Ntr-M+1..Ntr}Binomial[p,Ntr,i]; |

◄The probability of a failure

in decoding of a codeword of the length N

is the sum of the probabilities of |

|

param

goodN{p in P} = min{Ntr in NN[p]: Failure[p,Ntr]<=DER} Ntr; |

◄The number of packets in the codeword is computed as the minimal length at which the decoding failure probability is still less than DER. |

|

param

FEC{p in P} = if goodN[p]-1 in NN[p] and

Failure[p,goodN[p]-1]>DER then goodN[p]-1 + (log(Failure[p,goodN[p]-1]) -

log(DER)) / (log(Failure[p,goodN[p]-1]) -

log(Failure[p,goodN[p]])) else goodN[p]; |

◄Parameter FEC contains the interpolated smooth (non-integer) values of the

length of the codeword. The non-integer FEC

is computed using the failure value at N

(at which the failure is lower than DER),

the failure value at |

|

#=== #param

FEC{p in P} = M + if p=0 then 0 else log(DER/M)/log(p); |

◄End of pre-computing of the function FEC. Coarse approximation of FEC (commented). See Section VI of 051026-fec-overhead for comparison of the coarse approximation with the true FEC function. |

|

param

ARN{tc in TC, p in P} = if p<=tc then 0 else

(FEC[p]-FEC[tc])/FEC[tc]; |

◄Parameter ARN (Adaptive Redundancy Need) is the amount (in percentage) of

additional redundancy to be transmitted by the sender in order to recover p percent losses observed at the

receiver. Parameter ARN is indexed

by various media tolerance values (from the set TC). If the media stream is already equipped with a tolerance t, we assume then that for every

initial chunk of M media packets

sender actually transmits into the network |

|

param

benchmark_start; param

benchmark_stop; param

benchmark_info symbolic; param

logrec symbolic = sprintf("%s, %s, %s, done in %f

seconds", ver,sub(ctime(),"^ *[^ ]*

*",""),benchmark_info,benchmark_stop-benchmark_start); param

logfile symbolic = "aron.log"; |

◄The parameters for recording the benchmarking information. |

|

param

aron_b{tc in TC, t in 1..T, l in 1..layers[t]} = (if l >=2 then aron_b[tc,t,l-1]) + if incrabs[t,l]>1 then

bottlnum[t,l] * ARN[tc,1/incrabs[t,l]]; |

◄ARON stands from the Adaptive Redundancy Overall Need,

i.e. it is an aggregate need of redundancy in case of sequential

(non-simultaneous) failures of every link on the path of the flow. ARON is the measure of the efficiency

of a routing toward network failures. The parameter aron_b stores the portion of ARON

contributed by the bottleneck links. This parameter is indexed by a set of

various media tolerances, by the timeframe and by this timeframe’s capillary

routing layers. Parameter aron_b is

computed recursively: at the layer l

its value is equal to the sum of its value at the previous layer |

|

param

aron_p{tc in TC, t in 1..T, l in 1..layers[t]} = sum{(i,j) in PATH[t,l] diff

BOTTLENECKS[t,l]: incrabs[t,l]>1} ARN[tc,load[t,l,i,j]/incrabs[t,l]]; |

◄Parameter aron_p contains the portion of ARON contributed by path’s non-bottleneck links. Layers for which incrabs is equal to 1, i.e. when flow contains critical links, are skipped in computation. |

|

param

aron{tc in TC, t in 1..T, l in 1..layers[t]} = aron_b[tc,t,l]

+ aron_p[tc,t,l]; |

◄Finally the measure ARON for (i) a media stream equipped with a certain tolerance to random

losses and (ii) following a

capillary routing of a given timeframe and layer is the sum of the portion of

ARON contributed by bottleneck

links and the portion of ARON contributed

by non-bottleneck path links. For layers whose bottlenecks are critical links

carrying the entire flow, the ARON

is set to zero. In the layers, successive to such layer, ARON is computed as if the critical links are removed from the

network. |

|

param

shift{t in 1..T} = if pump[t,1]<=1 then 1 else 0; |

◄Parameter shift is set to 1 if the timeframe has a critical primary bottleneck or if there is no flow possible. |

|

param

chunk = (T + chunks-1) div chunks; |

◄Parameter chunk contains the number of timeframes per chunk. |

|

set

FRAMES{i in 1..chunks} = {t in (i-1)*chunk+1..min(i*chunk,T):

1+shift[t] in 1..layers[t]}; |

◄Set FRAMES is indexed by chunks and contains all valid timeframes to be comprised within each chunk. Timeframes having no feasible flow or having only one layer consisting of only single (critical) path are removed from the chunks. |

|

param

frames{i in 1..chunks} = card(FRAMES[i]); |

◄Parameter frames contains the number of valid timeframes in each chunk. |

|

set

CHUNKS = {i in 1..chunks: frames[i]>=1}; |

◄Set CHUNKS comprises of only those chunks, which contain at least one valid timeframe. |

|

param

maxlayers{i in CHUNKS} = max{t in FRAMES[i]} (layers[t] - shift[t]); |

◄Parameter maxlayers indexed by the elements of the set CHUNKS contains the maximal number of layers for the timeframes of the chunk. If the first layer of a timeframe must not be used, its layers are shifted down by one (the last layer is then duplicated). |

|

#average

ARON per frame param

afaron{tc in TC, i in CHUNKS, l in 1..maxlayers[i]} = 1/frames[i] * sum{t in FRAMES[i]}

aron[tc,t,min(l+shift[t],layers[t])]; |

◄The average ARON for a given fixed layer, over all timeframes of a chunk is stored in the parameter afaron. If the first layer of one of the timeframes is not usable, all its layers are shifted down by one. If a timeframe of the chunk does not have the layer, that is being averaged, always the last layer of the timeframe will be used. |

|

#param

links{t in 1..T} = # card(PATH[t,layers[t]]) + sum{l in 1..layers[t]-1}

card(BOTTLENECKS[t,l]); #param

layerlinks1{t in 1..T, l in 1..layers[t]} = if pump[t,l] > 1 then # card(PATH[t,l]) + sum{ll in 1..l-1:

pump[t,ll]>1} card(BOTTLENECKS[t,ll]); #param

layerlinks{t in 1..T, l in 1..layers[t]} = #

layerlinks1[t,min(l+shift[t],layers[t])]; #param

alaron{tc in TC, i in CHUNKS, l in 1..maxlayers[i]} = # (1/sum{t in FRAMES[i]} layerlinks[t,if l

<= layers[t] then l else layers[t]]) * # sum{t in FRAMES[i]}

aron[tc,t,min(l+shift[t],layers[t])]; |

|

|

param

dir symbolic; param

file symbolic = dir & "/aron.csv"; let

dir := sprintf("%s-aron",ver); shell

("rmdir /s /q " & dir); shell

("mkdir " & dir); shell

(" shell

("copy " & ver & "*.txt " & dir); shell

("copy config.txt " & dir); shell

("copy aron-config.txt " & dir); |

◄Creating an empty folder, prefixed with the version number, copying there all text files related with the current version, the capillary builder’s configuration file, and the ARON calculator’s configuration file. |

|

let

benchmark_info := "ARON"; let

benchmark_start := _ampl_time; print

"Benchmarking ",benchmark_info," ..."; |

◄Starting the benchmarking of the computation performance |

|

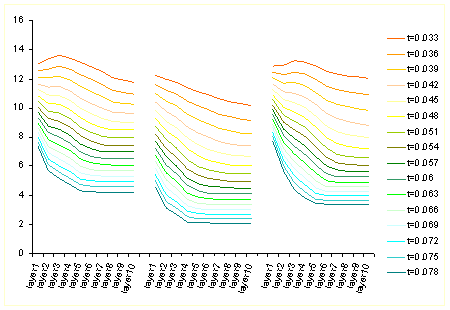

for{tc

in TC} { printf ",t=%s", round(tc,if tc>0 then max(

round(-log(tc)/log(10))+1 ,3)) > (file); } printf

"\n" > (file); |

◄Printing the header of the table |

|

for{i

in CHUNKS} { for{l in 1..min(10,maxlayers[i])} { printf "layer%d",l > (file); for{tc in TC} { printf ",%f", afaron[tc,i,l]

> (file); } printf "\n" > (file); } printf "\n" > (file); } close

(file); |

◄Printing the table containing for each chunk’s layer the average ARON value over all timeframes of the chunk.

|

|

let

benchmark_stop := _ampl_time; reset data logrec; print logrec; print logrec >> (logfile); close

(logfile); |

◄End of the benchmarking; recording the results of the benchmarking into a log file. |

* * *